Integration testing with ScalaTest, MongoDB and Play!

Experience from a Play! project

By Michal Bigos / @teliatko

Agenda

- Integration testing, why and when

- ScalaTest for integration testing with MongoDB and Play!

- Custom DSL for integration testing and small extensions to Casbah

Context

Where this all came from...

- Social network application with mobile clients

- Built on top of Play! 2

- Core API = REST services

- MongoDB used as main persistent store

- Hosted on Heroku

- Currently in beta

Integration testing, why and when?

Part One

Definition

The phase in software testing in which individual software modules are combined and tested as a group.

Another one :)

Testing business components, in particular, can be very challenging. Often, a vanilla unit test isn't sufficient for validating such a component's behavior. Why is that? The reason is that components in an enterprise application rarely perform operations which are strictly self-contained. Instead, they interact with or provide services for the greater system.

Unit tests 'vs' Integration tests

Unit tests properties:

Isolated - Checking one single concern in the system. Usually behavior of one class.

Repeatable - It can be rerun as many times as you want.

Consistent - Every run gets the same results.

Fast - Because there are a lot of them.

Unit tests 'vs' Integration tests

Unit tests techniques:

- Mocking

- Stubbing

- xUnit frameworks

- Fixtures in code

Unit tests 'vs' Integration tests

Integration tests properties:

Not isolated - Do not check the component or class itself, but rather integrated components together (sometimes whole application).

Slow - Depend on the tested component/sub-system.

Unit tests 'vs' Integration tests

Various integration tests types:

- Data-driven tests - Use real data and persistent store.

- In-container tests - Simulate real container deployment, e.g. a JEE one.

- Performance tests - Simulate traffic growth.

- Acceptance tests - Simulate use cases from the user's point of view.

Unit tests 'vs' Integration tests

Known frameworks:

- Data-driven tests - DBUnit, NoSQL Unit...

- In-container tests - Arquillian...

- Performance tests - JMeter...

- Acceptance tests - Selenium, Cucumber...

Why and when?

What cannot be written/simulated in a unit test

- Interaction with resources or sub-systems provided by the container.

- Interaction with external systems.

- Usage of declarative services applied to a component at runtime.

- Testing whole scenarios in one test.

- Architectural constraints that limit isolation.

Our case

Architectural constraints limiting isolation:

Lack of DI

    object CheckIns extends Controller {
      ...
      def generate(pubId: String) = Secured.withBasic { caller: User =>
        Action { implicit request =>
          val pubOpt = PubDao.findOneById(pubId)
          ...
        }
      }
    }

Controller depends directly on DAO

    object PubDao extends SalatDAO[Pub, ObjectId](MongoDBSetup.mongoDB("pubs")) {
      ...
    }
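Why the hard-wired singleton limits isolation can be sketched in plain Scala. Everything below is a simplified, hypothetical stand-in (Pub, PubRepository, RealPubDao and both controller-like classes are invented for illustration and are not the application's code): the first variant can only run against the real store, the second accepts a stub.

```scala
// Hypothetical, simplified stand-ins for the deck's Pub and PubDao.
final case class Pub(id: String, name: String)

trait PubRepository {
  def findOneById(id: String): Option[Pub]
}

// Stand-in for a DAO singleton bound to a real database at initialization time.
object RealPubDao extends PubRepository {
  def findOneById(id: String): Option[Pub] =
    sys.error("would need a running MongoDB")
}

// Hard-wired: the singleton is referenced by name, so a test cannot swap it out.
object HardWiredCheckIns {
  def pubName(pubId: String): Option[String] =
    RealPubDao.findOneById(pubId).map(_.name)
}

// Injected: the dependency is a constructor parameter, so a test can pass a stub.
final class InjectedCheckIns(pubs: PubRepository) {
  def pubName(pubId: String): Option[String] =
    pubs.findOneById(pubId).map(_.name)
}
```

Without the second shape, the only way to exercise the controller is with a real (or embedded) database behind the DAO, which is the route taken in this deck.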

Our case

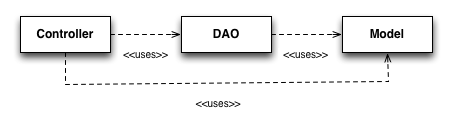

Dependencies between components:

Our case

Goals:

- Integration tests with real DAOs and DB

- Writing them like unit tests

ScalaTest for integration testing with MongoDB and Play!

Part Two

Testing strategy

Responsibility - encapsulate domain logic

Unit test - testing the correctness of domain logic

Testing strategy

Responsibility - read/save model

Integration test - testing the correctness of queries and modifications, with real data and DB

Testing strategy

Responsibility - serialize/deserialize model to JSON

Integration test - testing the correctness of JSON output, using the real DAOs

Testing frameworks

ScalaTest

- Standalone xUnit framework

- Can be used within JUnit, TestNG...

- Pretty DSLs for writing tests, especially FreeSpec

- Personal preference over specs2

- Hooks for integration testing: BeforeAndAfter and BeforeAndAfterAll traits

Testing frameworks

Play!'s testing support

Fake application

    it should "Test something dependent on Play! application" in {
      running(FakeApplication()) {
        // Do something which depends on Play! application
      }
    }

Real HTTP server

    "run in a server" in {
      running(TestServer(3333)) {
        await(WS.url("http://localhost:3333").get).status must equalTo(OK)
      }
    }

Testing frameworks

Data-driven tests for MongoDB

- jmockmongo - Mock implementation of the MongoDB protocol that works purely in memory.

- NoSQL Unit - More general library for testing with various NoSQL stores. It can provide a mocked or a real MongoDB instance. Relies on JUnit rules.

- EmbedMongo - Platform-independent way of running local MongoDB instances.

Application code

Configuration of MongoDB in application

    trait MongoDBSetup {
      val MONGODB_URL = "mongoDB.url"
      val MONGODB_PORT = "mongoDB.port"
      val MONGODB_DB = "mongoDB.db"
    }

    object MongoDBSetup extends MongoDBSetup {
      private[this] val conf = current.configuration

      val url = conf.getString(MONGODB_URL).getOrElse(...)
      val port = conf.getInt(MONGODB_PORT).getOrElse(...)
      val db = conf.getString(MONGODB_DB).getOrElse(...)

      val mongoDB = MongoConnection(url, port)(db)
    }

... another object

Application code

Use of MongoDBSetup in DAOs

    object PubDao extends SalatDAO[Pub, ObjectId](MongoDBSetup.mongoDB("pubs")) {
      ...
    }

We have to mock the DB or provide a real one to test the DAO

Application code

Controllers

    object CheckIns extends Controller {
      ...
      def generate(pubId: String) = Secured.withBasic { caller: User =>
        Action { implicit request =>
          val pubOpt = PubDao.findOneById(pubId)
          ...
        }
      }
    }

... you've seen this already

Our solution

Embedding embedmongo* into ScalaTest

    trait EmbedMongoDB extends BeforeAndAfterAll { this: BeforeAndAfterAll with Suite =>

      // Connection settings of the embedded instance; override them in a suite if needed
      def embedConnectionURL: String = "localhost"
      def embedConnectionPort: Int = 12345
      def embedMongoDBVersion: Version = Version.V2_2_1
      def embedDB: String = "test"

      lazy val runtime: MongodStarter = MongodStarter.getDefaultInstance
      lazy val mongodExe: MongodExecutable = runtime.prepare(new MongodConfig(embedMongoDBVersion, embedConnectionPort, true))
      lazy val mongod: MongodProcess = mongodExe.start()

      override def beforeAll(): Unit = {
        mongod // touching the lazy val starts the embedded MongoDB process
        super.beforeAll()
      }

      override def afterAll(): Unit = {
        super.afterAll()
        mongod.stop(); mongodExe.stop()
      }

      lazy val mongoDB = MongoConnection(embedConnectionURL, embedConnectionPort)(embedDB)
    }

* We love recursion in Scala, don't we?

Our solution

Custom fake application

    trait FakeApplicationForMongoDB extends MongoDBSetup { this: EmbedMongoDB =>

      lazy val fakeApplicationWithMongo = FakeApplication(additionalConfiguration = Map(
        MONGODB_PORT -> embedConnectionPort.toString,
        MONGODB_URL -> embedConnectionURL,
        MONGODB_DB -> embedDB
      ))
    }

The trait configures a fake application instance for the embedded MongoDB instance. MongoDBSetup consumes these values.
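The override mechanics can be pictured with plain maps. This is only a sketch under the assumption that the entries passed as additionalConfiguration take precedence over the application's own settings; the object name, the helper and the default values are hypothetical (only the three keys come from MongoDBSetup).

```scala
object MongoConfigSketch {
  // Keys as declared in the MongoDBSetup trait.
  val MongoUrlKey  = "mongoDB.url"
  val MongoPortKey = "mongoDB.port"
  val MongoDbKey   = "mongoDB.db"

  // Hypothetical application defaults (27017 is MongoDB's standard port).
  val applicationDefaults: Map[String, String] = Map(
    MongoUrlKey  -> "localhost",
    MongoPortKey -> "27017",
    MongoDbKey   -> "app"
  )

  // With `++` the right-hand entries win, so test overrides replace the defaults.
  def effective(overrides: Map[String, String]): Map[String, String] =
    applicationDefaults ++ overrides
}
```

Called with the embedded instance's port and database name, effective returns a configuration in which only those two keys differ from the defaults.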

Our solution

Typical test suite class

    class DataDrivenMongoDBTest extends FlatSpec
        with ShouldMatchers
        with MustMatchers
        with EmbedMongoDB
        with FakeApplicationForMongoDB {
      ...
    }

Our solution

Test method which uses mongoDB instance directly

    it should "Save and read an Object to/from MongoDB" in {
      // Given
      val users = mongoDB("users") // this is from EmbedMongoDB trait

      // When
      val user = User(username = username, password = password)
      users += grater[User].asDBObject(user)

      // Then
      users.count should equal (1L)
      val query = MongoDBObject("username" -> username)
      users.findOne(query).map(grater[User].asObject(_)) must equal (Some(user))

      // Clean-up
      users.dropCollection()
    }

Our solution

Test method which uses DAO via fakeApplicationWithMongo

    it should "Save and read an Object to/from MongoDB which is used in application" in {
      running(fakeApplicationWithMongo) {
        // Given
        val user = User(username = username, password = password)

        // When
        UserDao.save(user)

        // Then
        UserDao.findAll().find(_ == user) must equal (Some(user))
      }
    }

Our solution

Example of the full test from controller down to model

    class FullWSTest extends FlatSpec
        with ShouldMatchers
        with MustMatchers
        with EmbedMongoDB
        with FakeApplicationForMongoDB {

      val username = "test"
      val password = "secret"
      val userJson = """{"id":"%s","firstName":"","lastName":"","age":-1,"gender":-1,"state":"notFriends","photoUrl":""}"""

      "Detail method" should "return correct Json for User" in {
        running(TestServer(3333, fakeApplicationWithMongo)) {
          // Seed the user directly through the embedded MongoDB connection
          val users = mongoDB("users")
          val user = User(username = username, password = md5(username + password))
          users += grater[User].asDBObject(user)

          val userId = user.id.toString
          val response = await(WS.url("http://localhost:3333/api/user/" + userId)
            .withAuth(username, password, AuthScheme.BASIC)
            .get())

          response.status must equal (OK)
          response.header("Content-Type") must be (Some("application/json; charset=utf-8"))
          response.body must include (userJson.format(userId))
        }
      }
    }

Custom DSL for integration testing and small extensions to Casbah

Part Three

WORK IN PROGRESS

More data

Creating simple data is easy, but what about collections...

We need an easy way to seed them from a prepared source and check them afterwards.

Custom DSL for seeding the data

Principle

- Seed the data before the test

- Use it in the test ... read, create or modify

- Check it after the test (optional)
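The seed / use / clean-up cycle above can be sketched with the loan pattern. This is a minimal illustration, not the DSL itself: InMemoryDb is a hypothetical in-memory stand-in for a MongoDB database, and withSeededData is an invented helper name.

```scala
import scala.collection.mutable

// Hypothetical in-memory stand-in for a database: collection name -> documents.
final class InMemoryDb {
  private val collections = mutable.Map.empty[String, Vector[Map[String, Any]]]

  def seed(name: String, docs: Seq[Map[String, Any]]): Unit =
    collections(name) = collections.getOrElse(name, Vector.empty) ++ docs

  def find(name: String): Vector[Map[String, Any]] =
    collections.getOrElse(name, Vector.empty)

  def cleanUp(name: String): Unit =
    collections -= name
}

object SeedingSketch {
  // Loan pattern: seed the collection, run the test body, then always clean up,
  // even when the body throws (for example on a failed assertion).
  def withSeededData[A](db: InMemoryDb, name: String, docs: Seq[Map[String, Any]])(body: => A): A = {
    db.seed(name, docs)
    try body
    finally db.cleanUp(name)
  }
}
```

Because the clean-up sits in a finally block, a failing test does not leave seeded data behind for the next one.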

Custom DSL for seeding the data

Inspiration - NoSQL Unit, DBUnit

    public class WhenANewBookIsCreated {

        @ClassRule
        public static ManagedMongoDb managedMongoDb = newManagedMongoDbRule().mongodPath("/opt/mongo").build();

        @Rule
        public MongoDbRule remoteMongoDbRule = new MongoDbRule(mongoDb().databaseName("test").build());

        @Test
        @UsingDataSet(locations="initialData.json", loadStrategy=LoadStrategyEnum.CLEAN_INSERT)
        @ShouldMatchDataSet(location="expectedData.json")
        public void book_should_be_inserted_into_repository() {
            ...
        }
    }

Based on JUnit rules or verbose code

This is Java. The example is taken from the NoSQL Unit documentation.

Custom DSL for seeding the data

Goals

- Pure functional solution

- Better fit with ScalaTest

- JUnit independent

Custom DSL for seeding the data

Result

    it should "Load all Objects from MongoDB" in {
      mongoDB seed ("users") fromFile ("./database/data/users.json") and
        seed ("pubs") fromFile ("./database/data/pubs.json")

      cleanUpAfter {
        running(fakeApplicationWithMongo) {
          val users = UserDao.findAll()
          users.size must equal (10)
        }
      }

      // Probably will be deprecated in next versions
      mongoDB seed ("users") fromFile ("./database/data/users.json") now()

      running(fakeApplicationWithMongo) {
        val users = UserDao.findAll()
        users.size must equal (10)
      }

      mongoDB cleanUp ("users")
    }

Custom DSL for seeding the data

Already implemented

- Seeding, clean-up and clean-up after, for functional and non-functional usage.

- JSON file format similar to NoSQL Unit's. The difference: it works on a per-collection basis.

Custom DSL for seeding the data

Still in pipeline

- Checking against a dataset, similar to the @ShouldMatchDataSet annotation of NoSQL Unit.

- JS file format of mongoexport. Our biggest problem here is dates (proprietary format).

- JS file format with the full JavaScript functionality of the mongo command, to be able to run commands like: db.pubs.ensureIndex({loc : "2d"})

- NoSQL Unit JSON file format with multiple collections, seeding several collections at once.

Topping

Small additions to Casbah for better query syntax

We don't like this*

    def findCheckInsBetweenDatesInPub(
        pubId: String,
        dateFrom: LocalDateTime,
        dateTo: LocalDateTime): Option[CheckIn] = {
      val query = MongoDBObject("pubId" -> new ObjectId(pubId), "created" -> MongoDBObject("$gte" -> dateFrom, "$lt" -> dateTo))
      // headOption yields an Option, so the declared result type is Option[CheckIn]
      collection.find(query).map(grater[CheckIn].asObject(_)).toList.headOption
    }

... I cannot read it, can you?

* and when possible we don't write this

Topping

Small additions to Casbah for better query syntax

We like pretty code a lot ... like this:

    def findBetweenDatesForPub(pubId: ObjectId, from: DateTime, to: DateTime): Option[CheckIn] = {
      find {
        ("pubId" -> pubId) ++
        ("created" $gte from $lt to)
      } sort {
        ("created" -> -1)
      }
    }.toList.headOption

Casbah query DSL is our favorite ... even when it is not perfect

Topping

Small additions to Casbah for better query syntax

So we enhanced it:

    def findBetweenDatesForPub(pubId: ObjectId, from: DateTime, to: DateTime): Option[CheckIn] = {
      find {
        ("pubId" $eq pubId) ++
        ("created" $gte from $lt to)
      } sort {
        "created" $eq -1
      }
    }.headOption

Topping

Small additions to Casbah for better query syntax

Pimp my library again and again...

    // Adds $eq operator instead of ->
    implicit def queryOperatorAdditions(field: String) = new {
      protected val _field = field
    } with EqualsOp

    trait EqualsOp {
      protected def _field: String
      def $eq[T](target: T) = MongoDBObject(_field -> target)
    }

    // Adds Scala collection headOption operation to SalatCursor
    implicit def cursorAdditions[T <: AnyRef](cursor: SalatMongoCursor[T]) = new {
      protected val _cursor = cursor
    } with CursorOperations[T]

    trait CursorOperations[T <: AnyRef] {
      protected def _cursor: SalatMongoCursor[T]
      def headOption : Option[T] = if (_cursor.hasNext) Some(_cursor.next()) else None
    }
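Since Scala 2.10 the same kind of enrichment can also be written as an implicit class. The sketch below shows only the headOption part and uses java.util.Iterator as a stand-in for SalatMongoCursor, so it runs without Salat on the classpath; the names CursorSyntax and CursorOps are hypothetical.

```scala
object CursorSyntax {
  // java.util.Iterator stands in for SalatMongoCursor here (an assumption made
  // only to keep the sketch self-contained).
  implicit class CursorOps[T](cursor: java.util.Iterator[T]) {
    // Consumes at most one element: the first one, if there is any.
    def headOption: Option[T] =
      if (cursor.hasNext) Some(cursor.next()) else None
  }
}
```

After import CursorSyntax._, any such iterator gains headOption, which avoids materializing the whole result with toList just to read its first element.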

Thanks for your attention